From 2.5 years to 43 days: mapping all 14 trillion Minecraft chunks is finally feasible.

From 2.5 years to 43 days: mapping all 14 trillion Minecraft chunks is finally feasible.

Generating every Minecraft chunk sounds like a task for supercomputers—or a lifetime—but with the right optimizations, it’s no longer out of reach. By combining MPI parallelism with Cubiomes, I’ve boosted biome query throughput from 0.18 million per second to ~3.73 million per second, cutting the projected runtime from 2.5 years to just 43 days. This is just the beginning of scaling Minecraft world generation to levels previously thought impossible.

Parallelsing with OpenMPI

The first and most straightforward step was to parallelise the chunk generation across all available CPU cores using OpenMPI. Instead of having a single process generate all the chunks sequentially, the workload was divided evenly, with each MPI rank responsible for producing a subset of the total chunks. This approach scales almost linearly with the number of cores, since chunk generation is embarrassingly parallel as there are no dependencies between chunks, so each process can work independently without costly synchronization.

By distributing the computation in this way, I was able to fully saturate the CPU and drastically improve throughput. For example, a benchmark of one million biome queries produced the following result:

Performed 1000000 biome queries in 0.649 seconds.

Throughput: 1.54 million queries per second.Profiling

The first step before attempting to optimize any piece of code is to understand the source of bottlenecks. The easiest tool for the job was gprof. With the output from gprof converted into a flame graph, this is what I got:

main() [100%]

└─ genChunks() [100%]

└─ getBiomeAt() [100%]

└─ genBiomeNoiseScaled() [100%]

├─ getSpline() [83%]

│ ├─ sampleDoublePerlin() [66%]

│ │ └─ samplePerlin() [66%]

│ └─ get_resulting_node() [17%]

└─ voronoiAccess3D() [17%]What the graph actually shows is the percentage of runtime of each function in the total execution time. It is immidiately apparent that

Perlin noise

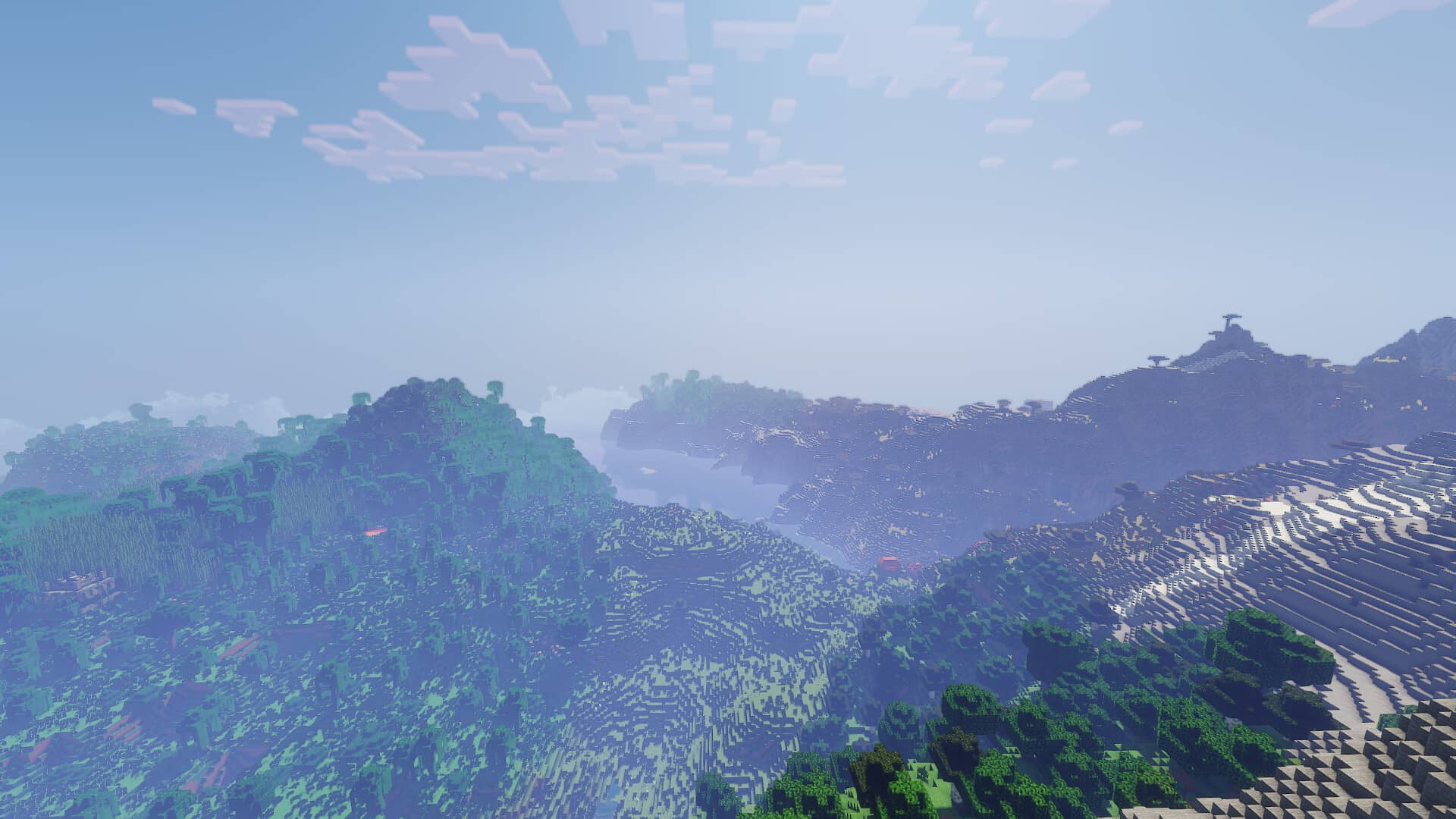

So what exactly is this “perlin noise”? And can’t we just use pure random numbers to generate terrain as that would be much faster?

The answer is graduality. Pure random noise by nature is … random. Each pixel ( I’m referring to the noise as if it was visualized as an image ) is unrelated to the ones around it. A terrain generated using random noise would be too choatic to look natural. It would have towering ice topped mountains neighbouring deserts. Rivers would instead of flowing with gentle turns, would be highly erratic.

On the other hand perlin noise is gradient noise, definable for any number of dimensions. The changes in perlin noise are gradual and with some imagination, representative of real world geography. This is the perfect candidate for terrain generation as it allows us to get smooth noise that is random, yet controlled enough to remain digestible.

Optimizations

Now things get really fun.

Cubiomes uses a

static inline double indexedLerp(const uint8_t idx, const double a, const double b, const double c)

{

switch (idx & 0xf)

{

case 0: return a + b;

case 1: return -a + b;

case 2: return a - b;

case 3: return -a - b;

case 4: return a + c;

case 5: return -a + c;

case 6: return a - c;

case 7: return -a - c;

case 8: return b + c;

case 9: return -b + c;

case 10: return b - c;

case 11: return -b - c;

case 12: return a + b;

case 13: return -b + c;

case 14: return -a + b;

case 15: return -b - c;

}

UNREACHABLE();

return 0;

}This seems okay at first glance. It appears to be notoriously simple and does not really contain any real logic that could cause slowdowns. But, In the case of this version, the compiler typically generates a jump table (or a series of conditional branches) to select the correct arithmetic expression. That means at runtime, the CPU must execute an indirect branch to jump to the right case. Indirect branches are notoriously hard for the branch predictor to guess correctly, since the target depends on runtime data (idx & 0xf). When the predictor misses, the CPU pipeline flushes and reloads, stalling execution. On tight inner loops (like noise generation or interpolation), these mispredictions accumulate into a significant performance hit.

On top of that, each case in the switch bloats the function body, putting more pressure on the instruction cache (I-cache). The CPU fetches and decodes more instructions than necessary, even though only one arithmetic form is used per call. Larger code footprints increase the chance of cache evictions, especially in performance-sensitive workloads where this function is called billions of times.

Thus I ditched this approach and implemented a simple lookup table for the three vectors a, b and c.

constexpr int8_t mul_a[16] = { 1, -1, 1, -1, 1, -1, 1, -1, 0, 0, 0, 0, 1, 0, -1, 0 };

constexpr int8_t mul_b[16] = { 1, 1, -1, -1, 0, 0, 0, 0, 1, -1, 1, -1, 1, -1, 1, -1 };

constexpr int8_t mul_c[16] = { 0, 0, 0, 0, 1, 1, -1, -1, 1, 1, -1, -1, 0, 1, 0, -1 };

static inline double gradientDot(const uint8_t idx, const double a, const double b, const double c)

{

const uint8_t i = idx & 0xf;

// The cast to float is important here

return static_cast<float>(mul_a[i]) * a + static_cast<float>(mul_b[i]) * b + static_cast<float>(mul_c[i]) * c;

}The lookup-table approach collapses all that branching into one sequence of straight-line code: an array load and a few multiply-adds. This means the CPU pipeline never needs to speculate on where to jump, reducing branch misprediction penalties to zero. The function body is also much smaller and denser, making it more cache-friendly. As a result, the optimization not only avoids unpredictable branching but also improves instruction cache locality, which together lead to smoother and more predictable performance.

Additionally I also replaced all calls to

static inline int fastFloor(const double x) {

const int i = static_cast<int>(x); // truncate toward zero

return i - (i > x); // subtract 1 if truncated value is greater than x

}This also boosts performance as the defined function is

One more factor is that we are only concerned about flooring an integer here. There is no need to handle floating points, infinities and NaN. Our value will never be in one of those situations unless something else has gone horribly wrong. This allows us to not use the much heavier inbuilt function.

Scale

One thing I overlooked earlier was the fact that we can define the resolution at which we want to get the biome data. In part 1 I used block coordinates for simulating throughput. Since a singular block is the highest possible detail resolvable, it is the slowest. Of course rendering the world biome map on a block-by-block scale resolution would the most accurate result but it simply unneccesary. When trying to generate a map of the entire Minecraft world, even doing so on a chunk’s scale is good enough. We can obviously re-render a selected region as a higher resolution if we want to.

Compiler Flags

A less obvious step was to use compiler flags to allow the compiler to perform more aggresive optimizations. The original cubiomes source actually didn’t use a lot of helpful flags that help squeeze the last bit of performance out.

Optimization flags

-funroll-loops – Unroll loops to reduce branching and improve vectorization (can increase binary size).-flto – Link-Time Optimization; allows cross-file optimizations and global inlining.-fomit-frame-pointer – Frees a register by not storing the frame pointer (makes debugging harder).

Math-related flags

-ffast-math – Enables aggressive math optimizations, ignoring strict IEEE 754 compliance (may change results).-fno-math-errno – Disables settingerrnoin math functions (removes overhead).-fno-trapping-math – Assumes math ops don’t generate traps (e.g., SIGFPE), enabling more reordering.-fno-signaling-nans – Treats all NaNs as quiet; simplifies optimizations by ignoring signaling NaNs.

Performance Numbers

After applying all of these optimizations and OpenMPI parallelisation, the performance improvements were dramatic. What once felt like an “impossibly slow” task is now almost within reach.

A benchmark run after the final round of optimizations produced the following result:

Performed 100000 biome queries in 0.028 seconds.

Throughput: 3.73 million queries per second.That’s more than a 20× improvement compared to the baseline of ~0.18 million queries per second in Part 1. This means that now we are down from 2.5years to generate the entire world, to 43 days. What’s important to note is that the final numbers are what I recieved on my own laptop. If I indeed run this on a supercomputing cluster the runtime would be reduced even further to within a few hours.

Next Steps

A natural next step is to offload the most computationally intensive part of world generation the Perlin noise evaluation to GPUs using CUDA. Perlin noise is inherently parallelizable across chunks and even across individual points within a chunk.

Some testing that I performed suggests that the average throughput over 5 runs would reach 50.36 million queries per second, a roughly 10× improvement over the current CPU-based approach.

If scaled across multiple high-end GPUs, the total biome generation for the entire world could potentially be reduced from 43 days down to just a few hours or less.

You can find the repo on my Gitlab:

Thanks for reading!

Until next time =)